ContextBuilder

The ContextBuilder is a PyTorch neural network architecture that supports scikit-learn-like fit and predict methods. It analyses sequences of security events and can be used to produce confidence levels for predicted future events, as well as to investigate the attention used to make those predictions.

context_builder.ContextBuilder.__init__() constructs a new instance of the ContextBuilder.

To load a pre-trained ContextBuilder from a file, see context_builder.ContextBuilder.load().

- ContextBuilder.__init__(input_size, output_size, hidden_size=128, num_layers=1, max_length=10, bidirectional=False, LSTM=False)[source]

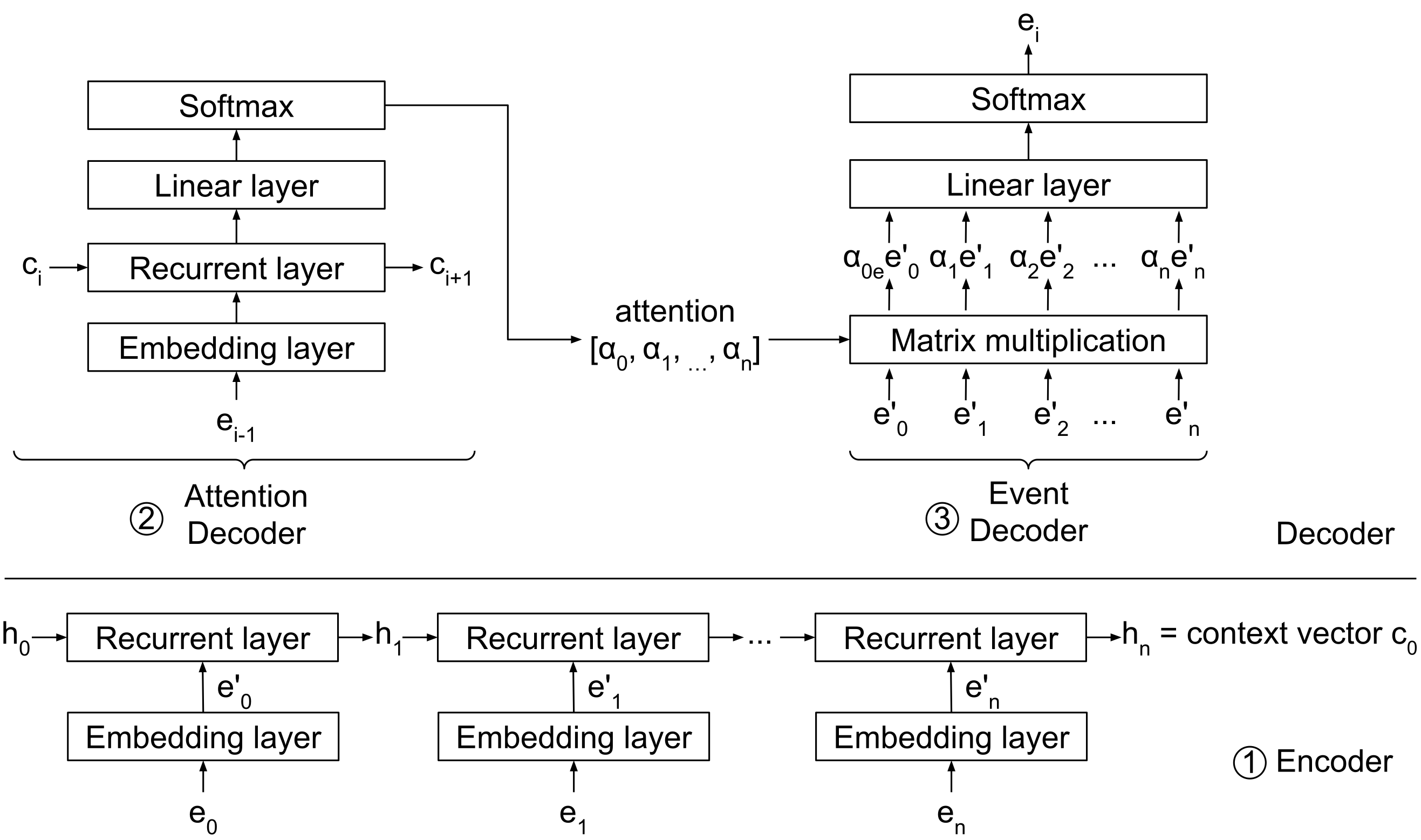

ContextBuilder that learns to interpret context from security events. Based on an attention-based Encoder-Decoder architecture.

- Parameters:

input_size (int) – Size of input vocabulary, i.e. possible distinct input items

output_size (int) – Size of output vocabulary, i.e. possible distinct output items

hidden_size (int, default=128) – Size of hidden layer in sequence to sequence prediction. This parameter determines the complexity of the model and its predictive power. However, high values will result in slower training and prediction times.

num_layers (int, default=1) – Number of recurrent layers to use

max_length (int, default=10) – Maximum length of input sequence to expect

bidirectional (boolean, default=False) – If True, use a bidirectional encoder and decoder

LSTM (boolean, default=False) – If True, use an LSTM as a recurrent unit instead of GRU

Overview

The ContextBuilder is a subclass of the PyTorch nn.Module class. This means that it implements the functionality of a complete neural network. Figure 1 shows the overview of the neural network architecture of the ContextBuilder.

Figure 1: ContextBuilder architecture.

The components of the neural network are implemented by the following classes:

These additional classes implement methods for training the ContextBuilder:

The ContextBuilder itself combines all underlying classes in its forward() function.

This takes the input of the network and produces the output by passing the data through all internal layers.

This method is also called from the __call__ method, i.e. when the object is called directly.

- ContextBuilder.forward(X, y=None, steps=1, teach_ratio=0.5)[source]

Forwards data through ContextBuilder.

- Parameters:

X (torch.Tensor of shape=(n_samples, seq_len)) – Tensor of input events to forward.

y (torch.Tensor of shape=(n_samples, steps), optional) – If given, use value of y as next input with probability teach_ratio.

steps (int, default=1) – Number of steps to predict in the future.

teach_ratio (float, default=0.5) – Ratio of sequences to train that use the given labels y. The remaining part will be trained using the predicted values.

- Returns:

confidence (torch.Tensor of shape=(n_samples, steps, output_size)) – The confidence level of each output event.

attention (torch.Tensor of shape=(n_samples, steps, seq_len)) – Attention corresponding to X given as (batch, out_seq, in_seq).
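The role of y and teach_ratio can be pictured as teacher forcing. The following is a minimal sketch in plain Python, not the code inside forward(): next_decoder_input is a hypothetical helper, and it assumes that the choice between the label and the model's own prediction is made by an independent random draw at each step.

```python
import random


def next_decoder_input(predicted, target, teach_ratio, rng):
    """Pick the event that is fed to the decoder at the next step.

    Hypothetical helper: with probability `teach_ratio` the known label
    `target` is used, otherwise the model's own prediction is fed back.
    If no label is available (target is None), the prediction is used.
    """
    if target is not None and rng.random() < teach_ratio:
        return target
    return predicted


rng = random.Random(0)  # fixed seed so the run is reproducible

# Simulate many steps where the model predicted event 7 but the label was 3.
choices = [
    next_decoder_input(7, 3, teach_ratio=0.5, rng=rng) for _ in range(10_000)
]
label_fraction = choices.count(3) / len(choices)
print(f"fraction of steps using the label: {label_fraction:.2f}")
```

Under this assumption, teach_ratio=1.0 always feeds the label and teach_ratio=0.0 always feeds the prediction back into the decoder.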

Fit/Predict methods

We provide the ContextBuilder as a classifier to learn sequences and predict the output values. To this end, we implement scikit-learn-like fit and predict methods for training and predicting with the network.

Fit

The fit() method automatically trains the network using the given input data X and y, while allowing the user to set various learning variables such as the number of epochs to train with, the batch_size and learning_rate.

Please see the method below for all available options.

- ContextBuilder.fit(X, y, epochs=10, batch_size=128, learning_rate=0.01, optimizer=torch.optim.SGD, teach_ratio=0.5, verbose=True)[source]

Fit the sequence predictor with labelled data

- Parameters:

X (array-like of type=int and shape=(n_samples, context_size)) – Input context to train with.

y (array-like of type=int and shape=(n_samples, n_future_events)) – Sequences of target events.

epochs (int, default=10) – Number of epochs to train with.

batch_size (int, default=128) – Batch size to use for training.

learning_rate (float, default=0.01) – Learning rate to use for training.

optimizer (optim.Optimizer, default=torch.optim.SGD) – Optimizer to use for training.

teach_ratio (float, default=0.5) – Ratio of sequences to train including labels.

verbose (boolean, default=True) – If True, prints progress.

- Returns:

self – Returns self

- Return type:

self
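How epochs and batch_size interact can be sketched with a generic mini-batch loop. This is an illustration in plain Python, not the code inside fit(): iterate_batches is a hypothetical helper, and one optimizer update per batch is an assumption.

```python
import math


def iterate_batches(n_samples, batch_size):
    """Yield (start, stop) index ranges that cover n_samples in order."""
    for start in range(0, n_samples, batch_size):
        yield start, min(start + batch_size, n_samples)


n_samples, batch_size, epochs = 1000, 128, 10

batches = list(iterate_batches(n_samples, batch_size))
updates = epochs * len(batches)  # assumes one optimizer step per batch

print(len(batches), "batches per epoch,", updates, "updates in total")
```

The last batch of each epoch is smaller whenever n_samples is not a multiple of batch_size.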

Predict

The predict() method outputs the confidence values for predictions of future events in the sequence and attention values used for each prediction.

The steps parameter specifies the number of predictions to make into the future, e.g. steps=2 will give the next 2 predicted events to occur.

- ContextBuilder.predict(X, y=None, steps=1)[source]

Predict the next elements in sequence.

- Parameters:

X (torch.Tensor) – Tensor of input sequences

y (ignored) –

steps (int, default=1) – Number of steps to predict into the future

- Returns:

confidence (torch.Tensor of shape=(n_samples, seq_len, output_size)) – The confidence level of each output

attention (torch.Tensor of shape=(n_samples, input_length)) – Attention corresponding to X given as (batch, out_seq, seq_len)
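To turn the returned confidence into concrete predictions, one common approach is to take the highest-scoring event at each predicted step. The sketch below uses nested Python lists as a stand-in for a tensor whose last dimension ranges over the output events, so that it runs without PyTorch. most_likely_events is a hypothetical helper, and the numbers are made up for illustration.

```python
def most_likely_events(confidence):
    """Return, per sample and per step, the index of the highest confidence.

    `confidence` is a nested list indexed as [sample][step][event].
    """
    return [
        [max(range(len(step)), key=step.__getitem__) for step in sample]
        for sample in confidence
    ]


# Made-up confidences: 2 samples, steps=2, output_size=3.
confidence = [
    [[0.1, 0.7, 0.2], [0.6, 0.3, 0.1]],
    [[0.2, 0.2, 0.6], [0.1, 0.8, 0.1]],
]

events = most_likely_events(confidence)
print(events)
```

With a real torch.Tensor, the same selection can be written as confidence.argmax(dim=-1).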

Fit_predict

The fit_predict() method performs the fit() and predict() functions in sequence on the same data.

- ContextBuilder.fit_predict(X, y, epochs=10, batch_size=128, learning_rate=0.01, optimizer=torch.optim.SGD, teach_ratio=0.5, verbose=True)[source]

Fit the sequence predictor with labelled data, then predict on the same data.

- Parameters:

X (torch.Tensor) – Tensor of input sequences

y (torch.Tensor) – Tensor of output sequences

epochs (int, default=10) – Number of epochs to train with

batch_size (int, default=128) – Batch size to use for training

learning_rate (float, default=0.01) – Learning rate to use for training

optimizer (optim.Optimizer, default=torch.optim.SGD) – Optimizer to use for training

teach_ratio (float, default=0.5) – Ratio of sequences to train including labels

verbose (boolean, default=True) – If True, prints progress

- Returns:

result – Predictions corresponding to X

- Return type:

torch.Tensor

Query

The query() method implements the attention query from the DeepCASE paper.

This method tries to find the optimal attention vector for a given input, in order to predict the known output.

- ContextBuilder.query(X, y, iterations=0, batch_size=1024, ignore=None, return_optimization=None, verbose=True)[source]

Query the network to get optimal attention vector.

- Parameters:

X (array-like of type=int and shape=(n_samples, context_size)) – Input context of events, same as input to fit and predict

y (array-like of type=int and shape=(n_samples,)) – Observed event

iterations (int, default=0) – Number of iterations to perform for optimization of actual event

batch_size (int, default=1024) – Batch size of items to optimize

ignore (int, optional) – If given ignore this index as attention

return_optimization (float, optional) – If given, also returns two boolean tensors marking the items whose confidence is >= the given value, one before and one after optimization, so that the number of such items can be counted. E.g. return_optimization=0.2 marks the elements with a confidence >= 0.2.

verbose (boolean, default=True) – If True, print progress

- Returns:

confidence (torch.Tensor of shape=(n_samples, output_size)) – Confidence of each prediction given new attention

attention (torch.Tensor of shape=(n_samples, context_size)) – Importance of each input with respect to output

inverse (torch.Tensor of shape=(n_samples,)) – Inverse is returned to reconstruct the original array

confidence_orig (torch.Tensor of shape=(n_samples,)) – Only returned if return_optimization is not None. Boolean array of items >= threshold before optimization

confidence_optim (torch.Tensor of shape=(n_samples,)) – Only returned if return_optimization is not None. Boolean array of items >= threshold after optimization
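Two of these return values are easier to read with a small example. The sketch below assumes that inverse follows the convention of torch.unique(..., return_inverse=True), i.e. that each entry maps an original sample to its row among the deduplicated inputs. The documentation above does not spell this out. It also shows the >= comparison behind confidence_orig and confidence_optim. Plain lists stand in for tensors, and all helper names and numbers are hypothetical.

```python
def unique_with_inverse(rows):
    """Deduplicate rows, returning (unique_rows, inverse).

    inverse[i] is the position in unique_rows of rows[i], so that
    [unique_rows[j] for j in inverse] rebuilds the original list.
    Rows are kept in first-seen order (torch.unique would sort them).
    """
    index, unique_rows, inverse = {}, [], []
    for row in rows:
        key = tuple(row)
        if key not in index:
            index[key] = len(unique_rows)
            unique_rows.append(list(row))
        inverse.append(index[key])
    return unique_rows, inverse


def at_least(confidences, threshold):
    """Boolean mask of the items whose confidence is >= threshold."""
    return [c >= threshold for c in confidences]


X = [[1, 2, 3], [4, 5, 6], [1, 2, 3]]
unique_X, inverse = unique_with_inverse(X)
restored = [unique_X[j] for j in inverse]  # same as the original X

# Made-up confidences in the observed event, before and after optimization.
before = [0.05, 0.30, 0.10]
after = [0.25, 0.35, 0.15]
confidence_orig = at_least(before, 0.2)
confidence_optim = at_least(after, 0.2)
print(inverse, confidence_orig, confidence_optim)
```

Summing each mask gives the number of items at or above the threshold, which makes it possible to compare how many items reach the threshold before and after optimization.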

I/O methods

The ContextBuilder can be saved and loaded from files using the following methods.

Please note that the context_builder.ContextBuilder.load() method is a classmethod and is called on the class itself rather than on an existing instance.

- ContextBuilder.save(outfile)[source]

Save model to output file.

- Parameters:

outfile (string) – File to output model.

- classmethod ContextBuilder.load(infile, device=None)[source]

Load model from input file.

- Parameters:

infile (string) – File from which to load model.

Example:

from deepcase.context_builder import ContextBuilder

builder = ContextBuilder.load('<path_to_saved_builder>')

builder.save('<path_to_save_builder>')